This is a long-form blog post version of my conference talk of the same name. Slides can be found here.

Single Page Applications (SPAs) are trendy with developers, but product owners, account managers, and marketing folks are sometimes hesitant to get on board. Why? SEO.

SEO, or search engine optimization, is the practice of increasing traffic to your website through unpaid search results. You may also hear this called “organic search traffic.” It’s important because you want everyone to find you. As developers, we should care too: what’s the point of spending hundreds of hours building an application if no one can find it? You want to improve both how often you appear in search results for relevant search terms and how high you rank.

SPAs get a pretty bad rap for SEO, but they’re not necessarily bad for SEO. Building a SPA is not a death sentence for your search rankings. You just need to be aware of how you build your site to support it.

In SEO, content is queen. This can make SPAs challenging because their content is rendered by JavaScript on an as-needed basis instead of being delivered with the initial page load like traditional web pages.

Meta content

Meta content such as the title and description supplies the display text in search results, and together with keywords and canonical tags it affects how search engines rank a URL for specific search terms. Build your application so that its meta content changes dynamically depending on the URL.
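
Here is a minimal sketch of URL-dependent meta content in TypeScript. The route pattern, the SITE_NAME placeholder, and the metaForPath and renderHeadTags helpers are all hypothetical; in a real SPA your router or framework would supply the per-route data. Rendering the tags to a string keeps the example runnable outside a browser; on the client you would apply the same values on each route change, for example by setting document.title.

```typescript
interface PageMeta {
  title: string;
  description: string;
}

// Placeholder site name, taken from the example URLs in this post.
const SITE_NAME = "yourapp.com";

// Escape characters that could break out of an HTML attribute or text node.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical route-to-metadata mapping; unknown paths fall back to site-wide defaults.
function metaForPath(pathname: string): PageMeta {
  const book = pathname.match(/^\/books\/([^/]+)\/?$/);
  if (book) {
    return {
      title: `Book ${book[1]} | ${SITE_NAME}`,
      description: `Details for book ${book[1]}.`,
    };
  }
  return { title: SITE_NAME, description: "Find your next favorite book." };
}

// Render the <title> and description <meta> tags for the current path.
function renderHeadTags(pathname: string): string {
  const meta = metaForPath(pathname);
  return [
    `<title>${escapeHtml(meta.title)}</title>`,
    `<meta name="description" content="${escapeHtml(meta.description)}">`,
  ].join("\n");
}
```

Keeping the lookup in one function means the same metadata can feed the browser, a prerender step, or a server render.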

As an aside, canonical tags can be particularly important in SPAs, since client-side routing in JavaScript means our URLs are not limited by physical pages. If you’re not familiar with canonical tags, they indicate to search engines which URL is the primary URL for a piece of content and help prevent your site from being penalized for duplicate content. If the same content can be reached via multiple unique routes, such as yourapp.com/books/:id/author/ and yourapp.com/authors/:id, you’ll want to use a canonical tag to indicate which of these URLs is primary and should be ranked and presented in the search results. Note that URLs that differ only by query parameters, www vs. non-www, or http vs. https also count as unique URLs.
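
One way to handle this is to compute the canonical URL from whatever URL was requested. The sketch below assumes https://yourapp.com (https, non-www) is the preferred origin, that query strings and trailing slashes never change the content, and that a hypothetical authorIdByBookId lookup tells us which author page a /books/:id/author/ URL duplicates. Those assumptions won’t hold for every app: if a query parameter such as a page number changes what is shown, it probably belongs in the canonical URL.

```typescript
// Preferred origin for canonical URLs (assumption for this sketch: https, non-www).
const CANONICAL_ORIGIN = "https://yourapp.com";

// Hypothetical lookup from book id to author id; in a real app this comes from your data.
const authorIdByBookId = new Map<string, string>([["42", "7"]]);

// Compute the canonical URL for any variant of a page URL.
function canonicalUrl(rawUrl: string): string {
  const { pathname } = new URL(rawUrl);
  // Treat /authors/7 and /authors/7/ as the same page.
  let path = pathname.replace(/\/+$/, "") || "/";
  // /books/:id/author shows the same content as /authors/:authorId, so point there.
  const match = path.match(/^\/books\/([^/]+)\/author$/);
  if (match) {
    const authorId = authorIdByBookId.get(match[1]);
    if (authorId !== undefined) path = `/authors/${authorId}`;
  }
  // Rebuilding from the preferred origin drops http vs https, www vs non-www,
  // the query string, and any hash in one step.
  return CANONICAL_ORIGIN + path;
}

// The tag each page would render in its <head>.
function canonicalLinkTag(rawUrl: string): string {
  return `<link rel="canonical" href="${canonicalUrl(rawUrl)}">`;
}
```

For example, http://www.yourapp.com/books/42/author/?ref=twitter and https://yourapp.com/authors/7 both end up pointing at https://yourapp.com/authors/7.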

Correct routing

While we’re talking about URLs, make sure your app is configured correctly to support deep linking, meaning that a URL pointing directly at a specific route inside your app (not just the home page) loads that route’s content. Backlinks are a big part of how search engines evaluate your SEO value. A backlink is a link to a page on your site; it can come from other pages on your own site or from other sites on the web. When a search crawler follows a backlink into a deep link on your site, it needs to be able to read the correct content. If your deep links aren’t working, and the content isn’t rendered or the wrong content is rendered, your search ranking will suffer.
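
Deep links often break on the server side: the server only has an index.html, so a request for /books/42/author/ can return a 404 before your router ever runs. A common fix is a fallback that serves the SPA’s index.html for any path the app can render. The sketch below models that decision as a pure function; the file set and route patterns are hypothetical, and returning a real 404 status for unknown paths (rather than 200 for everything) is a design choice so crawlers don’t index error pages as real content.

```typescript
interface Resolution {
  status: number;
  file: string;
}

// Hypothetical build output: files that actually exist on the server.
const staticFiles = new Set<string>(["/index.html", "/js/app.js", "/css/app.css"]);

// Hypothetical client-side routes, mirroring the example URLs in this post.
const appRoutes: RegExp[] = [
  /^\/$/,
  /^\/books\/[^/]+\/?$/,
  /^\/books\/[^/]+\/author\/?$/,
  /^\/authors\/[^/]+\/?$/,
];

// Decide what to send for a request path.
function resolveRequest(pathname: string): Resolution {
  // Real files (scripts, styles, images) are served as-is.
  if (staticFiles.has(pathname)) return { status: 200, file: pathname };
  // Any route the SPA can render gets the app shell, so deep links work on first load.
  if (appRoutes.some((route) => route.test(pathname))) {
    return { status: 200, file: "/index.html" };
  }
  // Unknown paths still get the shell (so the app can show its own "not found" view),
  // but with a 404 status so crawlers don't treat them as real pages.
  return { status: 404, file: "/index.html" };
}
```

In practice you would express the same rule in your web server or hosting configuration; many hosts call it an SPA or history-mode fallback.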

Avoid long-running scripts

Avoid long-running scripts on initial content load. Search crawlers will not wait very long for content to load. If your initial page load takes too long to render content, the search crawler won’t index anything, and this will hurt your search value. This is key for SPAs because the initial load takes the longest; after that, the site is lickety-split. But if that first load takes too long, you’ve already lost the search bot. (Trivia: Googlebot uses an accelerated JavaScript timer. If any of your scripts are time-dependent, be aware they may malfunction for Google.)

Don’t block scripts in robots.txt

Over the last few years, with the rising popularity of SPAs, Googlebot and other search crawlers have started claiming that they can execute client-side JavaScript. However, if you block your JavaScript files in robots.txt, the crawlers can’t even try to execute them, and your content won’t be indexed and ranked.
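
If you’re not sure whether your robots.txt blocks your bundles, a quick check can help. This is a deliberately simplified TypeScript sketch, not a full robots.txt implementation: it matches user agents by exact token (falling back to *), supports only plain path prefixes (no * or $ wildcards), and applies the longest-match rule with Allow winning ties. parseRobots and isAllowed are hypothetical helpers; for anything authoritative, use a complete parser or the search engines’ own tools.

```typescript
interface Rule {
  allow: boolean;
  path: string;
}

// Parse robots.txt into rule lists keyed by lowercase user-agent token.
function parseRobots(robotsTxt: string): Map<string, Rule[]> {
  const groups = new Map<string, Rule[]>();
  let currentAgents: string[] = [];
  let previousWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, "").trim(); // drop comments
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share the rules that follow them.
      if (!previousWasAgent) currentAgents = [];
      const agent = value.toLowerCase();
      currentAgents.push(agent);
      if (!groups.has(agent)) groups.set(agent, []);
      previousWasAgent = true;
      continue;
    }
    previousWasAgent = false;
    // An empty Disallow (or Allow) value places no restriction, so skip it.
    if ((field === "allow" || field === "disallow") && value !== "") {
      for (const agent of currentAgents) {
        groups.get(agent)?.push({ allow: field === "allow", path: value });
      }
    }
  }
  return groups;
}

// Simplified check: may `userAgent` fetch `path`? Prefix matching only, no wildcards.
function isAllowed(robotsTxt: string, userAgent: string, path: string): boolean {
  const groups = parseRobots(robotsTxt);
  const rules = groups.get(userAgent.toLowerCase()) ?? groups.get("*") ?? [];
  let best: Rule | undefined;
  for (const rule of rules) {
    if (!path.startsWith(rule.path)) continue;
    // The longest matching rule wins; on a tie, Allow beats Disallow.
    const longer = best === undefined || rule.path.length > best.path.length;
    const tieAllow = best !== undefined && rule.path.length === best.path.length && rule.allow;
    if (longer || tieAllow) best = rule;
  }
  // No matching rule means the path is allowed.
  return best === undefined ? true : best.allow;
}
```

Running your real robots.txt through a check like this with the paths of your script bundles (for example /js/app.js) quickly shows whether a broad Disallow is catching them.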

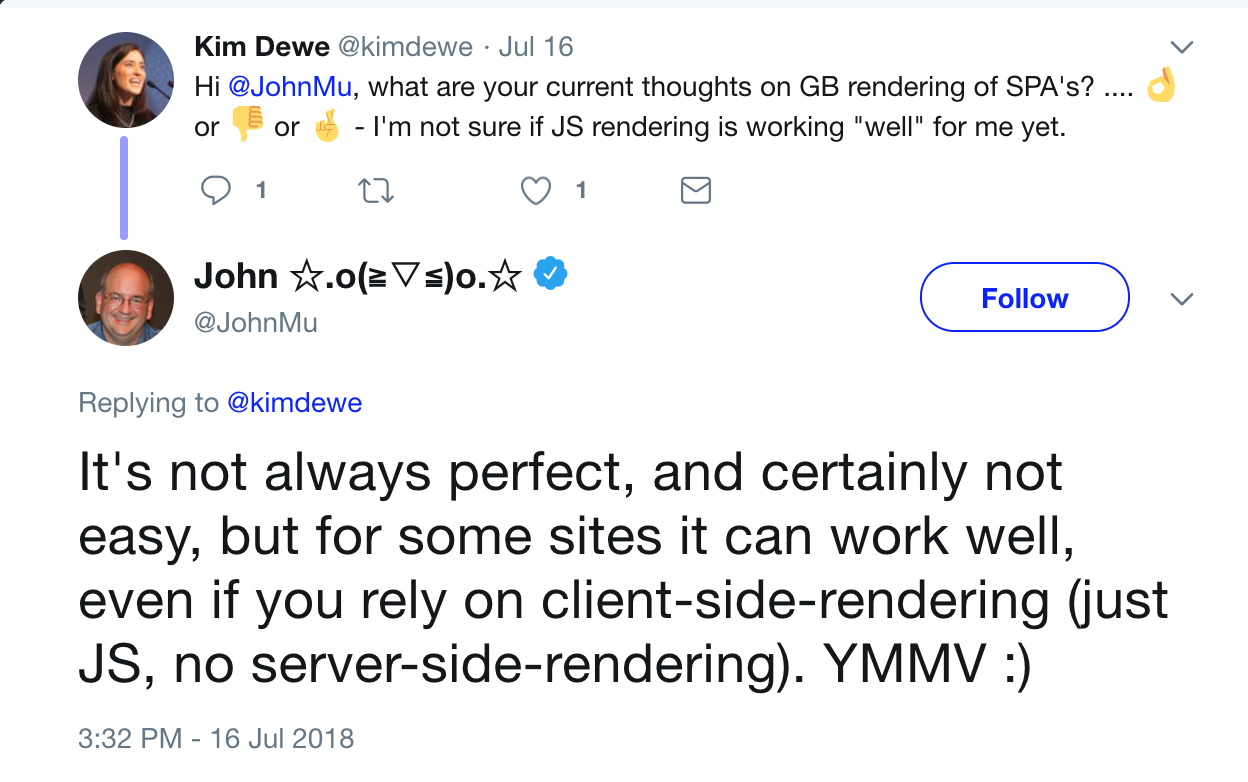

Despite the major search engines’ claims that they can execute client-side JavaScript, in practice it still seems to be spotty. There are numerous posts online about this not working well yet. Even Google’s Webmaster Trends Analyst acknowledged this summer that it’s not perfect. But it’s getting better and will continue to do so.

If, after all of this, you still aren’t seeing the search ranking you want, here are some tools you can use to improve your site’s SEO even more.

Fetch as Google

Google has a “Fetch as Google” tool that lets you see exactly what Googlebot is able to crawl and render. This can help you identify why certain areas of your app aren’t being indexed properly.

Prerendering

Prerendering lets you render the markup for a URL ahead of time and serve that content to crawlers as if it were a static site. Your SPA’s JavaScript then mounts over the already existing markup.

There are two main methods of prerendering. The first is to prerender as part of your build process: you render the markup for each URL and save it as a static asset in your app. That static asset is served on the first page load of a user’s session, the SPA mounts over the top of it, and your JavaScript takes over from there.
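
The build-time approach boils down to: list your routes, render each one, and write the result where a static server will find it. The sketch below assumes a hypothetical render function, a #app mount point, and a /js/app.js bundle path. It is not the API of prerender-spa-plugin (which renders each route in a headless browser), and it leaves out writing the files to disk.

```typescript
// Hypothetical renderer: returns the markup your app produces for one route.
type RenderRoute = (route: string) => string;

// Map a route to the file a plain static server would serve for it,
// e.g. /books/42 -> books/42/index.html and / -> index.html.
function outputPathFor(route: string): string {
  const trimmed = route.replace(/^\/+|\/+$/g, "");
  return trimmed === "" ? "index.html" : `${trimmed}/index.html`;
}

// Wrap prerendered markup in the page shell; the SPA later mounts over #app.
function wrapInShell(appHtml: string): string {
  return [
    "<!DOCTYPE html>",
    "<html><head></head><body>",
    `<div id="app">${appHtml}</div>`,
    '<script src="/js/app.js" defer></script>',
    "</body></html>",
  ].join("\n");
}

// Render every known route once, at build time. Writing to disk is left out.
function prerenderRoutes(routes: string[], render: RenderRoute): Map<string, string> {
  const files = new Map<string, string>();
  for (const route of routes) {
    files.set(outputPathFor(route), wrapInShell(render(route)));
  }
  return files;
}
```

Because every route has to be enumerated and rendered up front, build time grows roughly in proportion to the number of URLs.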

The second method is to use a SaaS offering. This is a good choice when your content changes frequently (more often than your build process runs). The SaaS provider serves the prerendered site to search crawlers. This can come dangerously close to cloaking (crawlers getting different content than people), so be careful here.

I use the prerender-spa-plugin package, which prerenders at build time, on this site. I saw my Google search ranking improve from the bottom of page 4 to near the top of page 1 within days of implementing it.
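
The SaaS approach hinges on deciding, per request, whether the visitor is a crawler. Here is a minimal sketch of that decision; the CRAWLER_PATTERNS list and the prerenderedSnapshots cache are hypothetical stand-ins for what a prerendering service manages for you. To stay on the right side of cloaking, each snapshot must contain the same content a person’s browser would end up rendering.

```typescript
// Hypothetical, non-exhaustive list of crawler user-agent patterns.
const CRAWLER_PATTERNS: RegExp[] = [/googlebot/i, /bingbot/i, /duckduckbot/i, /yandex/i];

function isCrawler(userAgent: string | undefined): boolean {
  const ua = userAgent ?? "";
  return CRAWLER_PATTERNS.some((pattern) => pattern.test(ua));
}

// Hypothetical cache of snapshots fetched from a prerendering service, keyed by path.
const prerenderedSnapshots = new Map<string, string>([["/books/42", "<h1>Book 42</h1>"]]);

// Crawlers get the snapshot when one exists; everyone else gets the normal SPA shell.
function chooseResponse(path: string, userAgent: string | undefined, spaShell: string): string {
  if (isCrawler(userAgent)) {
    const snapshot = prerenderedSnapshots.get(path);
    if (snapshot !== undefined) return snapshot;
  }
  return spaShell;
}
```

Falling back to the SPA shell when no snapshot exists means a missing cache entry degrades to client-side rendering rather than an error.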

But build-time prerendering can grind your build process to a halt if you have hundreds or thousands of URLs to render.

Server-side rendering

If you know you’ll be building a large site, you’ll want to server-side render it.

Server-side rendering (SSR) is when a page is rendered by the web server as part of the request/response cycle. In the case of Vue.js and similar frameworks, this is done by executing the app against a virtual DOM.
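
To make the request/response idea concrete, here is a toy server-side render in TypeScript. It uses plain string templates rather than Vue’s renderer, and the book data, route pattern, /js/app.js bundle, and __INITIAL_STATE__ global are assumptions for illustration. The point is that the full HTML, including the content and a serialized copy of the data for the client app to pick up, is produced on every request.

```typescript
interface Book {
  id: string;
  title: string;
}

// Hypothetical data store; a real server would usually await a database or API call here.
const booksById = new Map<string, Book>([["42", { id: "42", title: "Example Book" }]]);

// Escape text before inserting it into HTML.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete document: meta content, rendered markup, and serialized state.
function renderDocument(title: string, bodyHtml: string, state: unknown): string {
  // Escaping "<" stops data containing "</script>" from ending the script tag early.
  const serializedState = JSON.stringify(state).replace(/</g, "\\u003c");
  return [
    "<!DOCTYPE html>",
    `<html><head><title>${escapeHtml(title)}</title></head>`,
    `<body><div id="app">${bodyHtml}</div>`,
    `<script>window.__INITIAL_STATE__ = ${serializedState};</script>`,
    '<script src="/js/app.js" defer></script>',
    "</body></html>",
  ].join("\n");
}

// Handle one request: match the route, load its data, and render the page.
function handleRequest(pathname: string): { status: number; html: string } {
  const match = pathname.match(/^\/books\/([^/]+)\/?$/);
  const book = match ? booksById.get(match[1]) : undefined;
  if (book === undefined) {
    return { status: 404, html: renderDocument("Not found", "<h1>Not found</h1>", null) };
  }
  return {
    status: 200,
    html: renderDocument(book.title, `<h1>${escapeHtml(book.title)}</h1>`, book),
  };
}
```

Wiring this to Node’s built-in http.createServer would mean passing the request path in and writing the returned status and HTML out; the client bundle can then read the serialized state instead of refetching it.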

There are frameworks that make building an SSR app relatively easy (like Nuxt.js or Next.js). However, migrating an existing client-side app to SSR can be a labor-intensive challenge.

The key to SSR is designing your code to be “universal,” i.e., it must work both in the browser and in a server-based JavaScript environment. This means any code that expects browser APIs and objects like window to be available will not work on the server. Frameworks like Next.js abstract much of this heavy lifting away from the developer.
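
A common way to keep shared code universal is to check for browser globals before touching them, because referencing window directly under Node throws a ReferenceError. The sketch below reads window through globalThis so it also compiles with or without DOM type definitions; isBrowser and onlyInBrowser are hypothetical helper names.

```typescript
// True only when a `window` global exists (in the browser, not on a Node server).
// Going through globalThis keeps this compiling whether or not DOM typings are loaded.
const isBrowser: boolean =
  typeof (globalThis as unknown as { window?: unknown }).window !== "undefined";

// Run browser-only code in the browser; return a server-safe fallback everywhere else.
function onlyInBrowser<T>(browserFn: () => T, serverFallback: T): T {
  return isBrowser ? browserFn() : serverFallback;
}
```

For example, onlyInBrowser(() => window.innerWidth, 1024) would return 1024 during server rendering. Framework lifecycle hooks serve the same purpose; in Vue, for instance, mounted only runs in the browser.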

Currently, SSR is only really supported with a Node backend. There are some plugins for PHP, but they’re not great, and to be honest, I couldn’t find much more information than that.

SEO is a discipline all its own and requires a lot of time and attention. As developers, we should be aware of what our SEO goals are while we’re building our applications. Not only is it important from the perspective of our product owners, but we should want people to be able to find the awesome apps we’re spending hundreds of hours building.

This post is not meant to be an exhaustive guide to SEO for SPAs or for websites in general. I encourage you to read further and learn more about this. I’m always happy to chat about ideas and am excited to learn more as well. Tweet me @mercedescodes if you have thoughts you want to share.